Alpha Release - Completely Free

Know How Your AI Agent Works

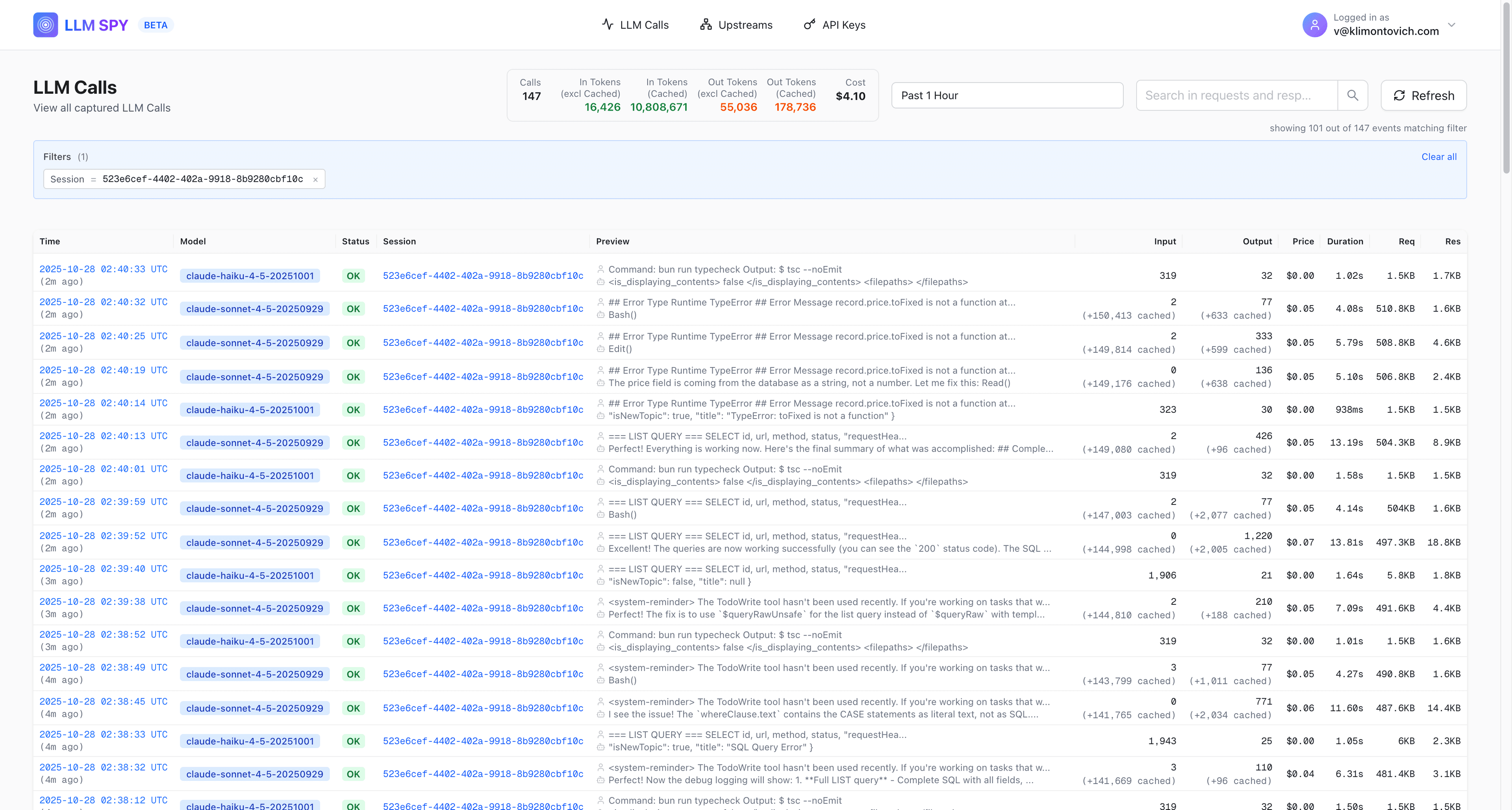

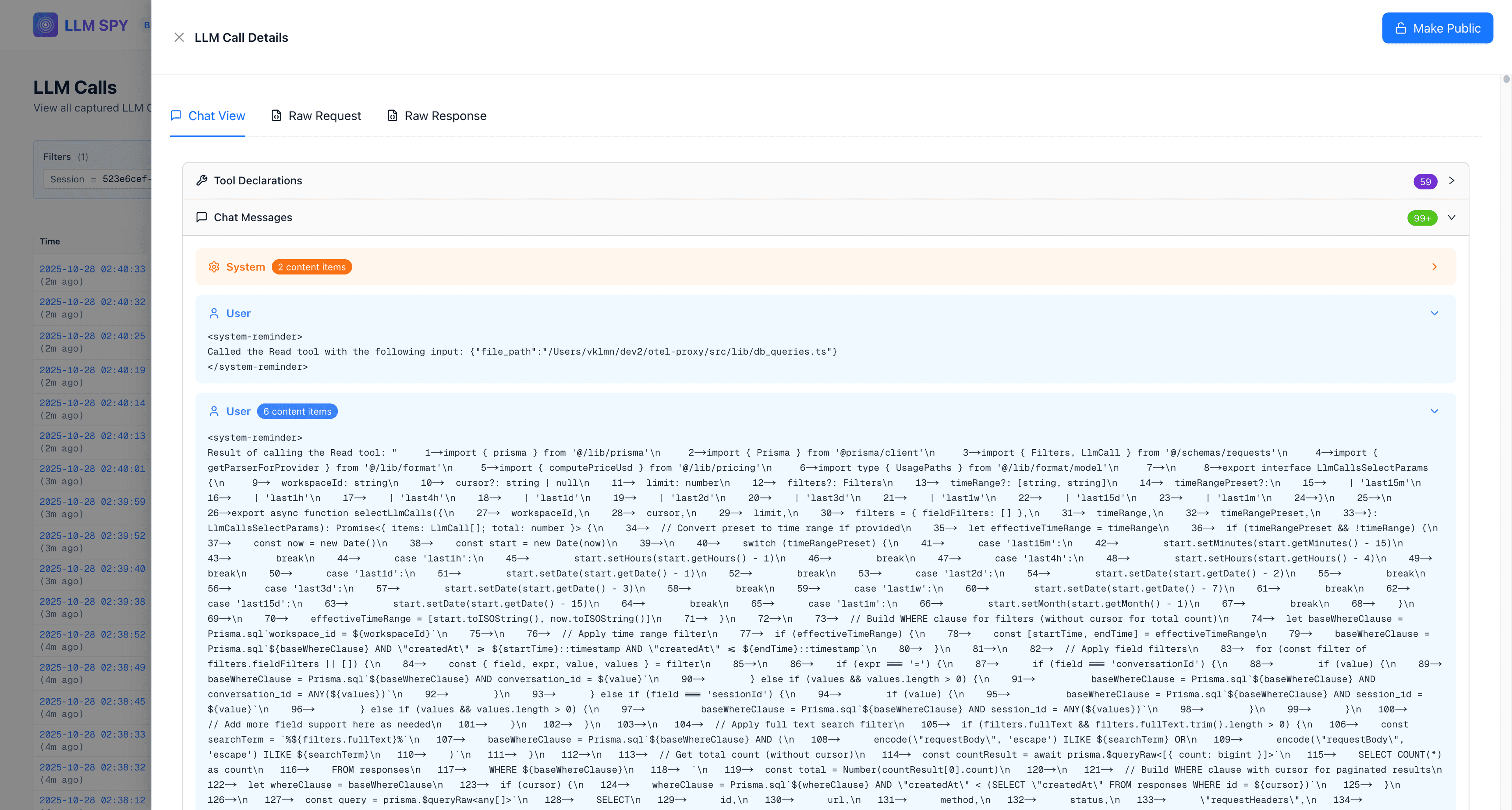

LLM SPY records the traffic between your AI agent and LLMs, revealing exactly what the agent does: every prompt, tool call, and decision.

llm-spy-monitor

ANTHROPIC_BASE_URL="https://llms.klmn.sh" \
ANTHROPIC_CUSTOM_HEADERS="x-proxy-auth: KEY" \
claude
> Build me a LangChain agent that teaches me how to cook sushi

See the results

https://llms.klmn.sh/workspace-1/requests

How It Works

Set up in minutes, monitor everything instantly

Create a Proxy

Point your AI agent at LLM SPY instead of directly at the LLM provider. Change the base URL environment variable, add your proxy key as a header, and you're monitoring.

Monitor Everything

View all LLM traffic in real time. See prompts, responses, tool calls, token usage, and execution flow.

Share Conversations

Share specific LLM conversations with your team via secure, secret links. Perfect for debugging, collaboration, or demonstrating AI behavior.

Example Use Case

Claude Code Monitoring

Monitor what Claude Code actually does by setting ANTHROPIC_BASE_URL

- See all prompts and context

- Track tool usage patterns

- Monitor token consumption


Why LLM SPY?

Complete transparency and control over your AI agents

Complete Visibility

Know exactly what your LLM does: every prompt, response, tool call, and decision. No more black-box AI.

- Full prompt history

- Tool execution logs

- Token usage tracking

Instant Setup

Just point your agent's base URL at LLM SPY with an environment variable (plus a proxy auth header) and you're monitoring. No SDK integration, no code changes required.

- One-line setup

- Works with any agent

- Zero performance impact

LLM Translation Gateway

Coming Soon

Use any LLM through any interface. Your app talks to "Anthropic" but actually uses GPT-4.

- Format conversion

- Provider switching

Coming Soon

Session Intelligence

Automatically groups related LLM interactions into coherent sessions, giving you the full conversation context.

Smart Conversation Tracking

LLM SPY intelligently detects and groups related requests into sessions. See the complete flow of multi-turn conversations, not just isolated API calls.

Automatic Session Detection

Identifies conversation patterns and links related messages together. No manual tagging or session IDs required.

Full Conversation Context

View entire conversation threads with preserved context. Understand how your agent builds on previous exchanges.

Session #A7B2

Request 1 • 14:23:01

→ "Create a Python function to parse JSON"

Response 1 • 14:23:02

← "def parse_json(data): ..."

Request 2 • 14:23:15

→ "Add error handling to that function"

Response 2 • 14:23:16

← "try: ... except JSONDecodeError: ..."

Automatically grouped into one session
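LLM SPY does not describe how its session detection works. One plausible approach, shown here as a hypothetical sketch rather than the product's implementation, relies on the fact that chat APIs are typically stateless: the client resends the whole conversation on every turn, so a request whose messages begin with an earlier request's messages plus the reply it received is most likely a continuation of that conversation. The names `Session` and `group_into_sessions` are illustrative assumptions, not part of any LLM SPY API.

```python
from dataclasses import dataclass, field
from typing import Any

# A chat message as sent to a Messages-style API, e.g. {"role": "user", "content": "..."}.
Message = dict[str, Any]


@dataclass
class Session:
    session_id: int
    # The conversation so far: the latest request's messages plus the reply to it.
    history: list[Message] = field(default_factory=list)
    request_ids: list[str] = field(default_factory=list)


def group_into_sessions(exchanges: list[tuple[str, list[Message], Message]]) -> list[Session]:
    """Group (request_id, request_messages, response_message) tuples, given in
    arrival order, into sessions using a prefix-matching heuristic."""
    sessions: list[Session] = []
    for request_id, messages, response in exchanges:
        # Sessions whose whole history is a strict prefix of this request's messages.
        candidates = [
            s for s in sessions
            if len(messages) > len(s.history) and messages[: len(s.history)] == s.history
        ]
        if candidates:
            # Prefer the longest matching history, i.e. the most specific continuation.
            session = max(candidates, key=lambda s: len(s.history))
        else:
            # No earlier conversation is a prefix of this one: start a new session.
            session = Session(session_id=len(sessions) + 1)
            sessions.append(session)
        session.history = [*messages, response]
        session.request_ids.append(request_id)
    return sessions


if __name__ == "__main__":
    # The two-turn exchange from the Session #A7B2 example, plus one unrelated request.
    q1 = {"role": "user", "content": "Create a Python function to parse JSON"}
    a1 = {"role": "assistant", "content": "def parse_json(data): ..."}
    q2 = {"role": "user", "content": "Add error handling to that function"}
    a2 = {"role": "assistant", "content": "try: ... except JSONDecodeError: ..."}
    q3 = {"role": "user", "content": "Summarize this README"}
    a3 = {"role": "assistant", "content": "The README describes ..."}
    log = [("req-1", [q1], a1), ("req-2", [q1, a1, q2], a2), ("req-3", [q3], a3)]
    for s in group_into_sessions(log):
        print(s.session_id, s.request_ids)
    # prints:
    # 1 ['req-1', 'req-2']
    # 2 ['req-3']
```

Plain prefix matching misses conversations whose history was edited or truncated between turns (for example, when an agent compacts its context), so a real detector would likely need fuzzier matching on top of this.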

Coming Soon

LLM Translation Gateway

Make any LLM speak any language. Your app talks to "Anthropic" but actually uses GPT-4, or vice versa.

Universal Format Translation

LLM SPY acts as a translation layer between your application and any LLM provider. Switch between models without changing a single line of code.

Automatic Format Conversion

Seamlessly converts between Anthropic, OpenAI, Google, and other LLM formats. Use any model with any interface.

Full Request Visibility

See exactly how requests are translated between formats. Debug and understand the conversion process in real time.

# Your app thinks it's using Claude...
ANTHROPIC_BASE_URL="https://llms.klmn.sh" \
ANTHROPIC_CUSTOM_HEADERS="x-proxy-auth: KEY" \
claude
> Build me a LangChain agent that teaches me how to cook sushi
# But actually uses GPT-4 via the translation gateway
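The translation gateway is marked Coming Soon and its internals are not described on this page. As a rough illustration of what format conversion involves, the hypothetical Python sketch below maps a text-only Anthropic Messages request to an OpenAI Chat Completions request and maps the reply back. It covers only the fields it names (system prompt, messages, `max_tokens`, `temperature`, stop sequences, finish reason, usage); tool calls, images, and streaming are deliberately left out, and the function names are illustrative assumptions.

```python
from __future__ import annotations

from typing import Any

# OpenAI reports "stop" both for natural ends and for stop-sequence hits, so the
# Anthropic distinction between "end_turn" and "stop_sequence" is lost here.
FINISH_TO_STOP_REASON = {"stop": "end_turn", "length": "max_tokens"}


def text_of(content: str | list[dict[str, Any]]) -> str:
    """Flatten Anthropic content (a string or a list of text blocks) to plain text."""
    if isinstance(content, str):
        return content
    parts = []
    for block in content:
        if block.get("type") != "text":
            # Images, tool_use and tool_result blocks are out of scope for this sketch.
            raise ValueError(f"unsupported content block type: {block.get('type')!r}")
        parts.append(block["text"])
    return "".join(parts)


def anthropic_to_openai(request: dict[str, Any], target_model: str) -> dict[str, Any]:
    """Translate an Anthropic Messages request body into a Chat Completions body."""
    messages = []
    if request.get("system"):
        # Anthropic carries the system prompt in a top-level field;
        # Chat Completions expects it as the first message.
        messages.append({"role": "system", "content": text_of(request["system"])})
    for msg in request["messages"]:
        messages.append({"role": msg["role"], "content": text_of(msg["content"])})
    body = {"model": target_model, "messages": messages, "max_tokens": request["max_tokens"]}
    if "temperature" in request:
        body["temperature"] = request["temperature"]
    if "stop_sequences" in request:
        body["stop"] = request["stop_sequences"]
    return body


def openai_to_anthropic(response: dict[str, Any], requested_model: str) -> dict[str, Any]:
    """Translate a Chat Completions response back into an Anthropic Messages response."""
    choice = response["choices"][0]
    return {
        "id": response["id"],
        "type": "message",
        "role": "assistant",
        # One possible choice: echo the model the client asked for, so the app still "sees" Claude.
        "model": requested_model,
        "content": [{"type": "text", "text": choice["message"]["content"] or ""}],
        # Unmapped reasons (e.g. tool calls) fall back to end_turn in this text-only sketch.
        "stop_reason": FINISH_TO_STOP_REASON.get(choice["finish_reason"], "end_turn"),
        "usage": {
            "input_tokens": response["usage"]["prompt_tokens"],
            "output_tokens": response["usage"]["completion_tokens"],
        },
    }
```

Structural mapping is only part of the job: newer OpenAI models expect `max_completion_tokens` instead of the deprecated `max_tokens`, so a production gateway would also need per-model parameter rules.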


Pricing

Free to Use

$0

No cost while hosting is manageable

It's free as long as it doesn't incur significant hosting or storage costs. If it does, we may start charging, but we will keep it affordable.

Start Now

Ready to See What Your AI Really Does?

Join the alpha and get complete visibility into your AI agents

Get Started for Free